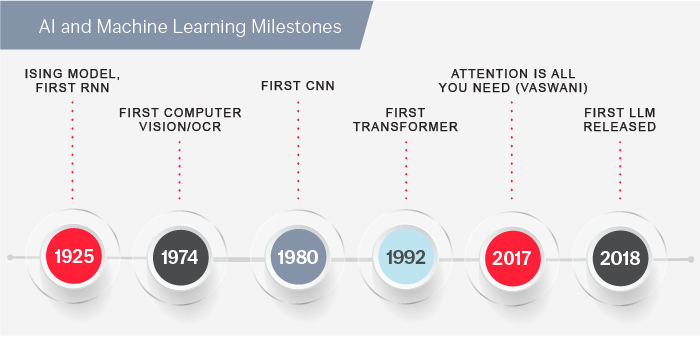

Data is all around us, and there is far more of it than most people realize: roughly 64 zettabytes.

To put that in context: if one terabyte were equivalent to one kilometer, a single zettabyte would be like making about 1,300 round trips to the Moon.
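The analogy can be checked with a few lines of arithmetic. This is a minimal sketch assuming decimal (SI) units, so 1 ZB = 10^9 TB, which maps to 10^9 km, and an average Earth–Moon distance of 384,400 km (a figure not stated in the original text).

```python
# Sanity-check the "1 TB = 1 km" analogy for zettabyte-scale data.
TB_PER_ZB = 10**9        # assumption: decimal units, 1 ZB = 10^21 B, 1 TB = 10^12 B
EARTH_MOON_KM = 384_400  # assumption: average Earth-Moon distance in kilometers

def moon_round_trips(zettabytes: float) -> float:
    """Number of Earth-Moon round trips if every terabyte were one kilometer."""
    km = zettabytes * TB_PER_ZB       # 1 TB maps to 1 km
    return km / (2 * EARTH_MOON_KM)   # one round trip covers the distance twice

print(f"{moon_round_trips(1):,.0f}")   # prints 1,301
print(f"{moon_round_trips(64):,.0f}")  # prints 83,247
```

One zettabyte works out to roughly 1,300 round trips, and the full 64 zettabytes to more than 83,000.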

And let's not forget: we're talking about 64 zettabytes, not just one. That's an enormous amount of data. Much of it, however, remains unstructured and hard to access. Given how quickly technology has evolved, that is hardly surprising.

If we take a walk through Tech History Lane, it's fascinating to see how far we've come. We started with the invention of the first electric battery in the 1800s, and it took another century for commercial lighting systems to become widespread.

Bringing value to people is the cornerstone of everything we do at ABBYY. Back in 1993, we introduced our first innovation: optical character recognition (OCR) technology. Remember 1995, when having 16MB of RAM in your computer felt as magical as the release of the first Harry Potter book? Just six years later, the Apple iPod put thousands of songs in our pockets.

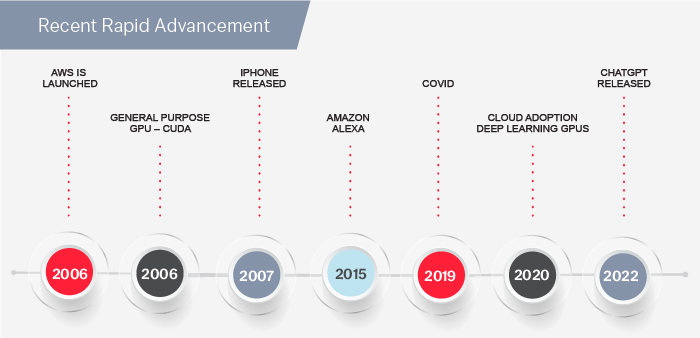

Along this journey, we witnessed rapid advances in infrastructure and hardware, such as the launch of AWS in 2006, which introduced cloud computing to the broader public, and the advent of general-purpose GPUs, which opened the door to the artificial intelligence (AI) and machine learning (ML) advancements we see today.

When we talk about the rapid advancements in AI and ML, it's worth noting that these technologies are older than commonly believed.

The first recurrent neural network architecture is often traced back to 1925, and computer vision research was already underway by 1974, long before the World Wide Web. An early precursor of the transformer, the architecture behind today's LLMs, is often traced back to 1992, even before the release of the Intel Pentium processor.

In 2018, OpenAI released GPT-1, its first GPT model, which went relatively unnoticed. Yet within roughly four years, ChatGPT had captured the attention and enthusiasm of nearly everyone.

The hype surrounding LLMs is real. In my opinion, expectations have never stood higher on the “Peak of Inflated Expectations” of Gartner's Hype Cycle, and the momentum continues to grow.

What makes it even more extraordinary is that this isn't limited to the tech community; LLMs have gained widespread recognition. Everyone seems to have heard about what LLMs can do, what they are believed to be capable of, and even their perceived shortcomings.

In fact, the European Union quickly passed new AI legislation, a remarkable feat considering the pace at which lawmakers usually operate. Perhaps they sought inspiration from sci-fi movies or ChatGPT to draft the laws!

By now, most people are familiar with the concept of “hallucinations” associated with LLMs. Numerous stories have been published about LLMs inventing scientific papers or making up laws and precedents to support legal cases. These stories have only fueled public interest. I believe that once we get the implementation of this technology right, it will bring about significant change; the question we need to ask is how we get there.

First and foremost, we need accurate models. As noted earlier, a vast volume of data is available today, and while LLMs like ChatGPT have been trained on a significant share of it, that abundance doesn't guarantee an understanding of the context in which the model is operating. That context comes from the data foundation within your organization.

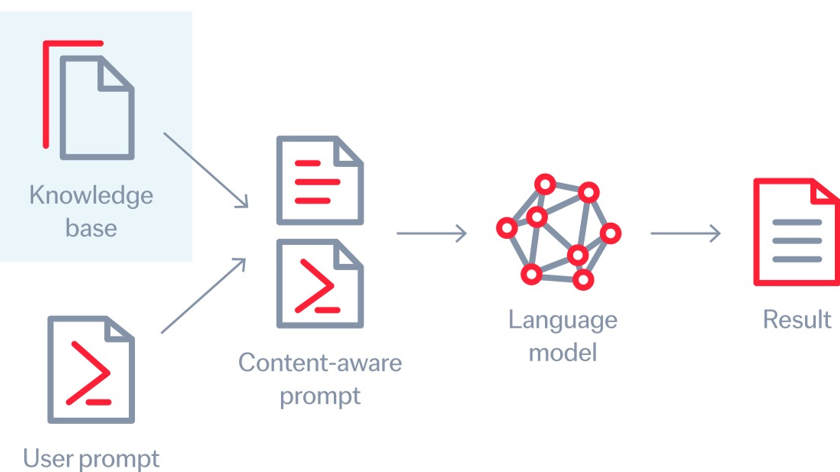

Many enterprises are eager to reach the finish line, hoping that LLMs can solve all their problems by providing insights from their extensive, but often disorganized and scattered, data. However, to make an LLM understand the desired context, you need the right data foundation and knowledge base. This is where the context injection technique comes into play.

Context injection involves automatically adding relevant knowledge to the user's prompt, making the prompt context-aware before it reaches the LLM. This keeps the model operating within the confines and context of your organization, which is why the importance of accurate models, and of the data that feeds them, cannot be overstated. To avoid the potential pitfalls, organizations must fill their knowledge bases with accurate data before venturing into the realm of generative AI.
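To make the idea concrete, here is a minimal sketch of context injection in plain Python. It is not ABBYY's implementation or any vendor's API: the function names (`retrieve`, `inject_context`) and the sample knowledge-base passages are hypothetical, and the simple word-overlap scoring is a stand-in for the embedding-based vector search that production systems typically use. The result is the augmented prompt that would be sent to an LLM; no model is called here.

```python
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def retrieve(query: str, knowledge_base: list[str], k: int = 2) -> list[str]:
    """Return up to k passages sharing the most words with the query.

    Word overlap is a hypothetical stand-in for embedding similarity.
    """
    query_words = Counter(tokenize(query))

    def score(passage: str) -> int:
        # Size of the multiset intersection between query and passage words.
        return sum((query_words & Counter(tokenize(passage))).values())

    ranked = sorted(knowledge_base, key=score, reverse=True)
    return [p for p in ranked[:k] if score(p) > 0]

def inject_context(query: str, knowledge_base: list[str], k: int = 2) -> str:
    """Prepend retrieved organizational knowledge to the user's question."""
    passages = retrieve(query, knowledge_base, k)
    context = "\n".join(f"- {p}" for p in passages) or "- (no relevant context found)"
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say so.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

# Hypothetical knowledge base built from an organization's documents.
kb = [
    "Invoice INV-1042 from Contoso is due on July 31.",
    "Our travel policy reimburses economy-class flights only.",
    "Supplier Contoso has net-30 payment terms.",
]
print(inject_context("When is the Contoso invoice due?", kb))
```

The instruction to answer only from the supplied context is one common way to discourage hallucinations, but it only helps if the passages in the knowledge base are themselves accurate.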

To accomplish this, especially when dealing with large amounts of data locked within documents, intelligent document processing (IDP) is crucial. By leveraging machine learning and AI techniques specifically tailored to extract meaning from documents, you can build the knowledge base necessary to embark on your journey of leveraging generative AI. This is precisely what ABBYY's technology does.
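As a rough illustration of how extracted document data might become knowledge-base content, the sketch below turns fields from an IDP step into short text passages. The `ExtractedField` structure, the per-field confidence score, and the 0.9 threshold are hypothetical assumptions, not the output format of any ABBYY product. Fields below the threshold are routed to human review instead of the knowledge base, reflecting the point that only accurate data should ground a generative model.

```python
from dataclasses import dataclass

@dataclass
class ExtractedField:
    """One field from a hypothetical document-extraction step."""
    name: str
    value: str
    confidence: float  # assumption: 0.0-1.0 score reported by the extractor

def to_knowledge_base(
    doc_id: str, fields: list[ExtractedField], min_confidence: float = 0.9
) -> tuple[list[str], list[tuple[str, str, str]]]:
    """Split fields into trusted knowledge-base passages and a review queue."""
    passages: list[str] = []
    needs_review: list[tuple[str, str, str]] = []
    for field in fields:
        if field.confidence >= min_confidence:
            # Trusted: store as a self-describing passage tied to its source document.
            passages.append(f"{doc_id}: {field.name} = {field.value}")
        else:
            # Uncertain: hold back for a human to verify before it can ground answers.
            needs_review.append((doc_id, field.name, field.value))
    return passages, needs_review

fields = [
    ExtractedField("vendor", "Contoso", 0.98),
    ExtractedField("total", "1,250.00 EUR", 0.97),
    ExtractedField("due_date", "2024-07-31", 0.62),
]
passages, review = to_knowledge_base("INV-1042", fields)
print(passages)  # ['INV-1042: vendor = Contoso', 'INV-1042: total = 1,250.00 EUR']
print(review)    # [('INV-1042', 'due_date', '2024-07-31')]
```

Keeping the document ID in each passage preserves a link back to the source, so any answer grounded in these passages can be traced to the original document.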

Let me emphasize that what we are witnessing today will undoubtedly transform the way we work with technology and live our lives. Just as the internet revolutionized how we access information, purchase goods and services, and consume media content, and as mobile devices brought technology to our fingertips and reshaped user experiences, AI is now ushering in the next wave of change.

AI provides us with a new interface that will redefine how we work, live, and leverage technology. It's akin to the computer mouse taking us out of the era of green letters on black screens. I am excited to witness the unfolding future and discover how we will interact with our devices and applications in the coming years.

Join me on The Intelligent Enterprise as I explore a range of topics, including the latest AI regulations, and learn how ABBYY is at the forefront of applied AI.